On Generating Music

No, I don’t mean by using AI. That’s lazy at best; slop, theft, and just plain unethical at worst.

AXIS, Jacob Motl, 2025.

My latest album, AXIS, was created through generative methods, a topic that has interested me for the past decade and still does. AI is not an avenue I even considered, nor one I ever would.

About 10 years ago I became interested in at least the idea of generating music. I value discovery in many facets of life, from playing games to talking with people to creating, and I became fond of the idea of being exposed to the unexpected in my own music (as opposed to manually architecting my music based on my own sketches).

Some brief history: generative music has been around for at least the last century, explored by the likes of John Cage (Music of Changes, created by using the I Ching or Book of Changes, a Chinese book of divination), Brian Eno (Lateral; several albums using varying generative techniques, as well as visual light pieces, interactive apps, and his “oblique strategies”), 65daysofstatic (the soundtrack for No Man’s Sky, a sci-fi video game which also uses generative systems), and even the field music for The Legend of Zelda: Breath of the Wild.

TL;DR: made with technology or not, generative music has been around for a while.

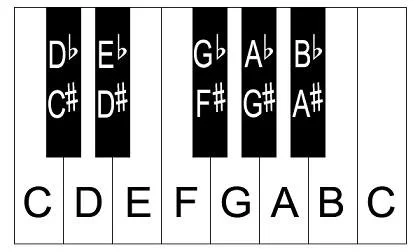

Despite my curiosity for the better part of a decade, I never made a grand foray into its possibilities. I had been compelled from the outset to create such music using technology; however, I did not have the resources to access the technology (time, money, or technological prowess to create my own program). Over the years, though, I certainly explored analog methods, the most prominent stemming from my love of gaming by using various types of dice (an 8-sided die to generate notes within a standard scale, or a 12-sided die for the 12 half-step notes in an octave). To date, these have only been explorations.

From C to C using only the white keys, there are 8 notes. Adding the black keys brings the total to 12 (excluding the upper C).
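For the curious, here is roughly what the dice method looks like in code. This is a minimal Python sketch, not anything used to make AXIS. It assumes the 8-sided die maps to the white keys from C4 up to C5 and the 12-sided die maps to the 12 half steps from C4 to B4, and it uses `random.randint` in place of a physical roll. The names `roll`, `dice_melody`, `D8_NOTES`, and `D12_NOTES` are made up for illustration.

```python
import random

# Hypothetical die-face mappings (face 1 = first note, and so on).
# 8-sided die: the white keys from C4 up to C5, both Cs included.
D8_NOTES = ["C4", "D4", "E4", "F4", "G4", "A4", "B4", "C5"]
# 12-sided die: all 12 half steps in one octave, upper C excluded.
D12_NOTES = ["C4", "C#4", "D4", "D#4", "E4", "F4",
             "F#4", "G4", "G#4", "A4", "A#4", "B4"]

def roll(sides, rng=random):
    """Simulate one roll of a fair die: an integer from 1 to `sides`."""
    return rng.randint(1, sides)

def dice_melody(notes, length, rng=random):
    """Roll a die with one face per note `length` times; return the notes rolled."""
    return [notes[roll(len(notes), rng) - 1] for _ in range(length)]

if __name__ == "__main__":
    rng = random.Random(2025)  # fixed seed so the output is repeatable
    print("d8: ", dice_melody(D8_NOTES, 8, rng))
    print("d12:", dice_melody(D12_NOTES, 8, rng))
```

Swapping one list for the other is the whole difference between the two dice: the die only picks a face, and the mapping decides what that face means musically.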

It’s interesting: in the last north-of-a-handful of years, I’ve been slowly chipping away at learning video game development, with the caveat that I have no background in coding or computer programming. The key word here is learning. Programming is far from easy and, although I understand it a little better every year, I have barely scratched the surface.

About a year ago, I came across a neat free lil piece of software called Sonic Pi. It is a code-based music creation tool that people use for live performances, DJ-style (that’s a weird one), thanks to a live playback feature that lets you update the code in real time while the music plays (that’s an even weirder one). Immediately I saw some spark of potential in using the software - and it’s free - so I downloaded it and gave it a solid play session: messing around with the included sounds, editing the pre-built scripts, even making my own. All the while, I kept pressing play to see - or rather, hear - the music being played back. Super cool, yeah?

Well then it dawned on me: You silly goose, you can create scripts that generate music!

Next thing I knew, I was figuring out how to go about such a project. In programming, there are approximately one metric megazillion ways to do one thing. Analysis paralysis, anyone? Just me? Okay.

Through months of tinkering and trial and error and building and tearing down and rebuilding dozens of versions of scripts, I landed on a version of the idea I liked, which eventually grew into AXIS.

Brian Eno describes (through my paraphrasing) this trial-and-error process rather well: what you put in must be carefully and painstakingly curated for there to be an intriguing output. This was core. I wanted to keep the project simple, using only one or two instruments (for an easier time mixing later on). I spent a few days creating what I call “note sets” for AXIS. These are small groups of notes, grouped by how they would function vertically (that is, harmonically, sounding at the same time) within the music.

Since that was the case, I had to make sure every note would sound good with every other note, because I had no way of knowing which notes would play in sync (not the boy band). So I had to account from the get-go for any significant dissonances that would sound “wrong.” Or “ugly.” Or “OMG why would I write such burning rubber sludge? (Cue: throw up.)”

So I literally put pencil to paper to “map out” the complete harmonies for each of the 12 pieces, quadruple-checking that everything within a piece would sound fine with… well, everything else within the piece.
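A check like this can also be sketched in code. The Python below is a hypothetical illustration, not the process used for AXIS: it pools every note from a piece’s note sets and flags any pair whose interval class is a half step (minor second or major seventh) or a tritone. Which intervals count as “ugly” is an assumption here, so `HARSH_INTERVAL_CLASSES` is meant to be tuned to taste. It also ignores octave and register, which matter a great deal in practice.

```python
from itertools import combinations

# Pitch classes: C = 0, C#/Db = 1, ..., B = 11.
PITCH_CLASSES = {
    "C": 0, "C#": 1, "Db": 1, "D": 2, "D#": 3, "Eb": 3, "E": 4, "F": 5,
    "F#": 6, "Gb": 6, "G": 7, "G#": 8, "Ab": 8, "A": 9, "A#": 10, "Bb": 10, "B": 11,
}

# Assumption: interval classes treated as "significant dissonance".
# 1 = minor second / major seventh, 6 = tritone.
HARSH_INTERVAL_CLASSES = {1, 6}

def interval_class(a, b):
    """Shortest distance in half steps between two pitch classes (0 to 6)."""
    d = (PITCH_CLASSES[b] - PITCH_CLASSES[a]) % 12
    return min(d, 12 - d)

def check_piece(note_sets):
    """Pool every note from a piece's note sets and return the pairs that clash."""
    notes = sorted({n for s in note_sets for n in s},
                   key=lambda n: (PITCH_CLASSES[n], n))
    return [(a, b) for a, b in combinations(notes, 2)
            if interval_class(a, b) in HARSH_INTERVAL_CLASSES]

if __name__ == "__main__":
    print(check_piece([["C", "D", "E"], ["G", "A"]]))       # []
    print(check_piece([["C", "E", "G"], ["F", "A", "C"]]))  # [('E', 'F')]
```

Under this rule, note sets that together spell a C major pentatonic (C, D, E, G, A) pass cleanly, while bringing in an F next to the E gets flagged.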

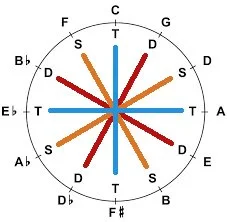

The Circle of Fifths

In short, chords of the same color may be substituted for each other.

As an aside, I based the entire album on Béla Bartók’s axis theory. Each track is in a different one of the 12 keys, and the tracks are ordered so that the music makes sense harmonically when played in sequence, including the wrap-around from the last track back to the first. The album is named after the axis theory, and the cover art is my interpretation of the harmonic axis.
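For reference, the axis system as it is usually summarized groups the 12 keys into three functional axes (tonic, dominant, subdominant), each made of four keys a minor third apart. On the circle of fifths, every third key lands on the same axis. The Python sketch below encodes only that rule. It is an illustration, not the logic behind the AXIS track order; the function names are hypothetical, and each key gets one fixed spelling (F# rather than Gb, and so on).

```python
# Keys around the circle of fifths, one spelling per key.
CIRCLE_OF_FIFTHS = ["C", "G", "D", "A", "E", "B", "F#", "C#", "Ab", "Eb", "Bb", "F"]
# Each step of a fifth clockwise cycles tonic -> dominant -> subdominant;
# every third step returns to the same axis.
FUNCTIONS = ["tonic", "dominant", "subdominant"]

def axis_function(key, tonic="C"):
    """Which axis `key` belongs to, relative to `tonic`."""
    steps = (CIRCLE_OF_FIFTHS.index(key) - CIRCLE_OF_FIFTHS.index(tonic)) % 12
    return FUNCTIONS[steps % 3]

def axis(key):
    """The four keys on the same axis as `key`: every third step around the circle."""
    i = CIRCLE_OF_FIFTHS.index(key)
    return [CIRCLE_OF_FIFTHS[(i + 3 * k) % 12] for k in range(4)]

if __name__ == "__main__":
    for function in FUNCTIONS:
        members = [k for k in CIRCLE_OF_FIFTHS if axis_function(k) == function]
        print(function, members)
```

With C as the tonic, this puts C, A, F#, and Eb on the tonic axis, G, E, C#, and Bb on the dominant axis, and D, B, Ab, and F on the subdominant axis, which is the grouping behind the idea that keys sharing an axis can stand in for one another.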

After that, I created the scripts in Sonic Pi for each piece. These set the parameters that would be used during playback: pitches to be played, pitch duration, rest duration, when pitches would be played and in what order, when rests would be played, and so on. More trial and error.
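A generative script of this kind boils down to a handful of parameters and a loop. The sketch below is in Python rather than Sonic Pi’s own language, and every parameter name in it (`note_set`, `note_durations`, `rest_durations`, `rest_chance`) is hypothetical. At each step it decides between a note and a rest, picks a pitch from the note set and a duration from a list, and takes an optional seed so a run can be reproduced.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Sequence

@dataclass
class Event:
    note: Optional[str]  # None means this event is a rest
    beats: float         # how long the note or rest lasts, in beats

def generate(note_set: Sequence[str],
             note_durations: Sequence[float],
             rest_durations: Sequence[float],
             rest_chance: float,
             length: int,
             seed: Optional[int] = None) -> List[Event]:
    """Build a sequence of `length` notes and rests from the given parameters."""
    rng = random.Random(seed)  # same seed -> same sequence
    events: List[Event] = []
    for _ in range(length):
        if rng.random() < rest_chance:
            # Rest: silence for a randomly chosen rest duration.
            events.append(Event(None, rng.choice(rest_durations)))
        else:
            # Note: a random pitch from the note set, random note duration.
            events.append(Event(rng.choice(note_set), rng.choice(note_durations)))
    return events

def total_beats(events: Sequence[Event]) -> float:
    """Total length of the sequence in beats."""
    return sum(e.beats for e in events)

if __name__ == "__main__":
    piece = generate(["C4", "E4", "G4", "B4"], [0.5, 1, 2], [0.5, 1], 0.25, 16, seed=42)
    for e in piece:
        print(e.note or "rest", e.beats)
    print("total beats:", total_beats(piece))
```

In Sonic Pi itself, the same idea would more likely be written with its built-in `play`, `sleep`, and `choose` inside a loop, with the sound happening in real time instead of being returned as a list.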

Then came the recorded playback. Sonic Pi plays back (and, optionally, records) the music in real time, which was the deciding factor in using only two instruments: less time to record, less to mix. Factoring in weird hiccups in the scripts and recordings I just plain didn’t like, it took a while to get everything recorded.

The mixing stage was where it all came together (see also: water is wet). In brief, through effects processing, the instruments’ sound quality went from just-better-than-MIDI (i.e., clearly fake) to “believable instrument run through effects.” I am happy with the result.

As Eno describes, the curated inputs really are the load-bearing support for generative works. Each step in creating AXIS demanded careful consideration: the note sets, the scripts and how they would function, and the decisions made when mixing.

I mentioned earlier that I value discovery. Creating AXIS was certainly a new process for me, and it came with many layers of discovery - more than I could have imagined before creating it. Part of that discovery (or maybe it’s actually a reminder) is in recognizing how a seemingly small choice, such as one or a few notes, greatly affects the end result. If I hadn’t been careful about my note choices or how they were arranged together, the entire process of creating AXIS would have been longer. Or the music would have turned out “ugly” and dissonant. Or maybe I would have abandoned the music altogether.

There is certainly application here to everyday life. What may seem like a mundane choice (from grabbing Taco Bell the night before your big interview [risky decision] to holding the door for a stranger [kind act]) could have greater and likely unforeseen impacts. Maybe we experience the outcomes, or maybe not. But either way, the outcome(s) will exist.

My takeaways from creating AXIS: I enjoy this type of composing and will continue to explore its possibilities; I can steer the work and the work can steer me - both of those can be true at the same time; and, quite possibly the most important lesson, that we would all do well to be more intentional about curating the inputs of our lives.